How do Makers Build Voice Control with ReSpeaker?

(The original Chinese version of this article is written by Hsiang-yang Lu and published on MakerPRO)

Speech recognition has been widely applied these years. Apple launched the voice assistant, Siri, for iPhone in 2011; then Google and Microsoft also released Google Now (2012) and Cortana (April, 2014) respectively.

In November 2014, Amazon released Echo family robot (USD$179) that made the speech recognition applications from mobile devices to appliances in living rooms. In March 2016, the mini version of Echo, Echo Dot, was released (USD$49), and the mobile version, Amazon Tap (USD$129) was launched as well. With the releases of new devices, the speech recognition assistive devices have been widely applied.

Maker’s Speech Recognition Development Kit: ReSpeaker

The speech recognition applications we have mentioned above are mostly applied to search the Internet, turn on and use app, or get speech response. However, the technologies are rarely applied to manipulate appliances and IoT devices. Thus, SeeedStudio launched ReSpeaker development kit in November 2016, which can recognize speech and also allows makers to control appliance and IoT devices with hardware control circuit development.

How can ReSpeaker be applied to speech recognition extensively?

ReSpeaker has utilized lots of existing technologies. The development board uses the same controller, ATmega32u4, as Arduino so that those makers who are familiar with Arduino can apply their existing control circuit design to ReSpeaker. Meanwhile, this board also provides the interfaces of I2C, AUX, USB for makers to have more extensions to access electronic peripherals.

In terms of Wi-Fi, ReSpeaker uses MediaTek MT7688. This chip used to be applied to home Wi-Fi router only but was redesigned to be LinkIt Smart 7688/7688 Duo by referring to Linino ONE and Arduino Yun. Then, the chip becomes quite popular in the maker community.

Software and hardware integration brings huge potential

To have MT7688 perform more stably, the chip utilizes AcSip’s SiP packaging technologies to package the required circuit in a module, which will be then delivered to SeeedStudio to produce ReSpeaker.

Therefore, the voice made by users will be received by MT7688 first and then transmitted to the Internet via Wi-Fi. The voice will be processed with cloud services on the Internet and the response will be sent back. MT7688, then, makes the sound from the speaker or transfers the response to signals to control the appliances.

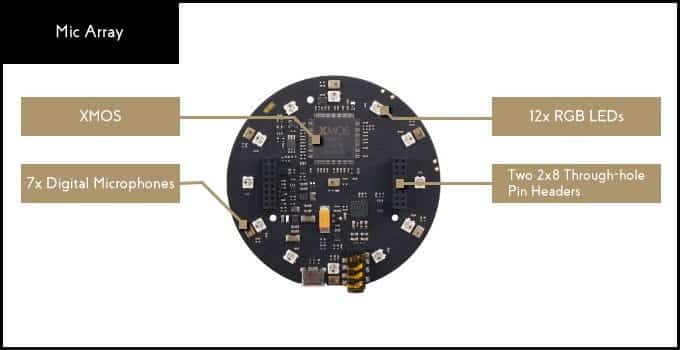

What should be noted is ReSpeaker receives the voice from a microphone only. It’s more suitable for users who make the voice close to the microphone and with little background noise. If you would like to make the voice far away or in a noisier environment, it’s suggested to use Array MIC, which is the optional accessory of ReSpeaker.

Here is the video of testing:

As to the development environment of control applications, ReSpeaker and LinkIt Smart 7688/7688 Duo both use the embedded Linux operating system, OpenWrt. The supported programming languages include Python, C/C++, Arduino, JavaScript, and Lua.

Speech recognition service is the key point

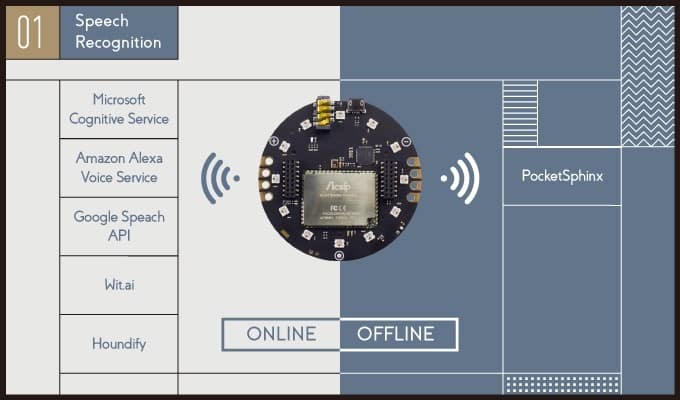

We have talked about the combination of software and hardware. What about the speech recognition? ReSpeaker does not have its own speech recognition technologies but calls the voice cloud services from well-known technology companies, such as Cognitive Service from Microsoft, Alexa Voice Service (AVS) from Amazon, Search API from Google, Wit.ai, and Houndify.

As ReSpeaker cannot access the Internet and call speech recognition services, PocketSphinx can be called in the off-line mode. ReSpeaker will process and recognize the voice and then take the actions after understanding the meaning.

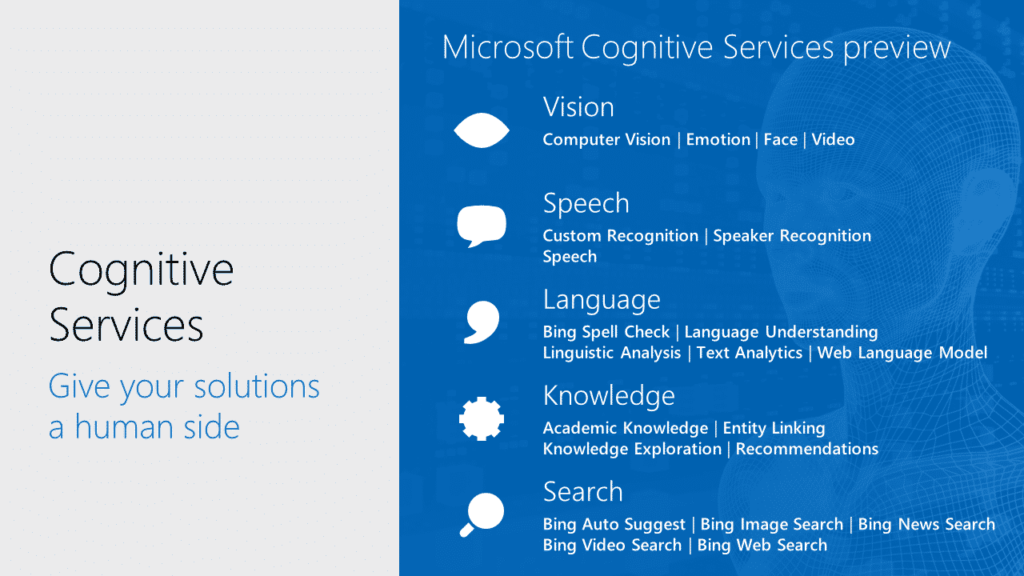

Take Cognitive Service from Microsoft for example. Microsoft Cognitive is composed of Bing Speech API, Custom Speech Service, and Speaker Recognition API. The definitions are listed below:

• Bing Speech API transfers the voice into texts, sends them to Bing search engine, and tries to figure out the meaning of the speech.

• Custom Speech Service is a more advanced recognition that could remain high accuracy in a noisy environment or voice from different directions.

• Speaker Recognition API can distinguish which family member made the voice, grandfather or grandmother.

In fact, Microsoft Cognitive Service not only has speech recognition but also visual recognition, language recognition, knowledge recognition, search recognition. Different from speech recognition, language recognition is to distinguish if there’s typo in a paragraph, to tell the language of the text and to find out the keyword from a paragraph. These could be tags for categorization and search.

What should be noted is that Microsoft Cognitive Service pursues revenue for sure. Users can apply the service but with limits. Take Bing Speech API for example. Users can call the service for free 5 times and need to use it for no more than 15 seconds and fewer than 1000 characters each time. If your usage exceeds, it will charge you USD$4 for every 1000 time to call the service, USD$9 for less than 10 hours, USD$7.5 for 10 – 100 hours, and USD$5.5 for more than 100 hours.

Other services are similar. Free services impose limits on the use, such as Amazon and Google. Moreover, Amazon supports the recognition in English and German so far.

Ubiquitous Potential in New Applications

Speech recognition can be applied not only voice control but also other fields, like the KOTOHANA technology developed by SGI Japan. Through speech recognition, it can distinguish speaker’s emotions, e.g. nervous, happy, or angry. It has 10 levels for each emotion. This technology will be transferred to NEC later on.

Emotion distinguishing can be applied to various scenarios. For example, in a car, if the driver feels excited (he/she may have lower concentration, the car security system (ABS and AirBag) will sense more strictly. If the device is in a restaurant, it can sense the atmosphere, noisy or silent, to change the lighting and music. Also, it can be applied to assist customer services to make sure they have comforted the callers’ emotion. Also it can help e-pet to understand each family member’s emotion and to comfort them.

In fact, as a new human machine interface is launched, it might change people’s behaviors and even become a new model for technology application. iPhone in 2007 is the best sample of the influence on touch panel. Now the speech recognition has become popular and might be applied to wearables and IoT. Makers’ creativity and technologies could accelerate the development.

Lastly, the technologies from speech recognition to language recognition requires lots of efforts on software and algorithms. But there seems to be fewer people devoting to the hardware development.